Running light ML training on a MacBook Air with an M2 chip

Goal: see what Apple's M-series chips can do

Using training scripts from:

https://github.com/rasbt/machine-learning-notes/tree/main/benchmark/pytorch-m1-gpu

This repo also contains some other cool ML scripts and Python notebooks that can help you mess around when learning about neural nets.

I used the `lenet-mnist.py` and `vgg16-cifar10.py` scripts.

Neither is a state-of-the-art convolutional neural network for image classification. In `vgg16-cifar10.py`, VGG16 is the architecture and CIFAR-10 is the training dataset; likewise, `lenet-mnist.py` trains LeNet on MNIST. LeNet is more for educational purposes and doesn’t need much compute, so it finishes too fast to really compare. VGG16 is more compute-intensive and may need a GPU for proper training. As you’ll see, the validation scores suck.

PyTorch has added support for Apple's M-series chips through the Metal Performance Shaders (MPS) framework. MPS enables GPU-accelerated computing on M-series chips, and PyTorch leverages this by translating its operations into MPS-compatible commands.
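Here is a minimal sketch of how a script might choose between the two devices. `pick_device` is a hypothetical helper, not something from the benchmark repo; it relies on `torch.backends.mps.is_available()` and falls back to `"cpu"` when PyTorch is missing or too old to have the MPS backend.

```python
import importlib.util


def pick_device(prefer_gpu: bool = True) -> str:
    """Return "mps" if PyTorch can reach the Apple GPU, otherwise "cpu"."""
    if not prefer_gpu:
        return "cpu"
    if importlib.util.find_spec("torch") is None:
        return "cpu"  # PyTorch is not installed in this environment
    import torch

    mps = getattr(torch.backends, "mps", None)  # absent on old PyTorch builds
    if mps is not None and mps.is_available():
        return "mps"
    return "cpu"


device = pick_device()
print(f"Using device: {device}")
```

The returned string can be passed to `torch.device(device)` and then to `model.to(...)` and `tensor.to(...)`, which is roughly what a `--device` flag ends up doing.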

Some operations may not be supported yet, so set the environment variable `export PYTORCH_ENABLE_MPS_FALLBACK=1` in your terminal to enable CPU fallback for unsupported operations.
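If you'd rather not depend on the shell, the same flag can be set from Python. This is a sketch under one assumption: as far as I can tell, the variable is read when `torch` is loaded, so it has to be set before the import.

```python
import os

# Assumption: PyTorch reads this flag at import time, so set it before `import torch`.
# setdefault keeps any value already exported in the terminal.
os.environ.setdefault("PYTORCH_ENABLE_MPS_FALLBACK", "1")

fallback_enabled = os.environ["PYTORCH_ENABLE_MPS_FALLBACK"] == "1"
print(f"CPU fallback for unsupported MPS ops: {fallback_enabled}")
```

Operations that fall back run on the CPU, so a model that leans heavily on unsupported ops will be slower than the MPS numbers suggest.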

Here are the commands:

python3 vgg16-cifar10.py --device "mps"

python3 vgg16-cifar10.py --device "cpu"
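To compare the two runs by wall-clock time instead of eyeballing them, a small wrapper like the one below could launch each command and time it. `time_command` and `compare_devices` are hypothetical helpers; the only thing taken from the commands above is the `--device` flag.

```python
import subprocess
import sys
import time
from typing import Dict, Sequence


def time_command(cmd: Sequence[str]) -> float:
    """Run a command to completion and return the elapsed wall-clock seconds."""
    start = time.perf_counter()
    subprocess.run(list(cmd), check=True)  # raises CalledProcessError on failure
    return time.perf_counter() - start


def compare_devices(script: str, devices: Sequence[str] = ("mps", "cpu")) -> Dict[str, float]:
    """Time one full run of `script` per device, passing the --device flag."""
    return {d: time_command([sys.executable, script, "--device", d]) for d in devices}
```

Calling `compare_devices("vgg16-cifar10.py")` from the script's directory would return something like a `{"mps": ..., "cpu": ...}` dictionary of seconds. The timings include startup and data loading, so they are only a rough end-to-end comparison.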

To optimize GPU utilization, increase your batch size. Larger batches let more data be processed at once, which improves throughput and reduces idle time. However, watch your memory usage in Activity Monitor or with PyTorch's memory profiling utilities. If your memory utilization gets too high, the system starts swapping (moving data to disk), and swapping significantly degrades performance because disk I/O is much slower than memory.
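One way to check whether a larger batch actually helps is to measure throughput in samples per second at a few batch sizes. The sketch below is framework-agnostic: `step` is a hypothetical callable that runs one training step, and `dummy_step` is a stand-in workload, not real training.

```python
import time
from typing import Callable


def samples_per_second(step: Callable[[int], None], batch_size: int, n_steps: int = 5) -> float:
    """Time n_steps calls of step(batch_size) and return samples processed per second."""
    start = time.perf_counter()
    for _ in range(n_steps):
        step(batch_size)
    # With a real MPS step, GPU work is queued asynchronously, so you would
    # likely call torch.mps.synchronize() here (assuming PyTorch 2.x).
    elapsed = max(time.perf_counter() - start, 1e-9)  # guard against division by zero
    return batch_size * n_steps / elapsed


def dummy_step(batch_size: int) -> None:
    """Stand-in workload (not real training): cost grows with the batch size."""
    sum(i * i for i in range(batch_size * 1000))


for bs in (32, 64, 128):
    print(f"batch_size={bs}: {samples_per_second(dummy_step, bs):.0f} samples/s")
```

With a real training step, throughput usually rises with batch size until the GPU is saturated or memory runs out; the dummy workload here will not show that curve.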

Here are the Activity Monitor and training results for the CPU:

Note: 400% CPU probably means it's using four of the cores, since Activity Monitor counts each fully busy core as 100%.
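The arithmetic behind that reading is just a division by 100, assuming Activity Monitor reports each fully busy core as 100%. `cores_worth` is a hypothetical helper for illustration.

```python
import os


def cores_worth(cpu_percent: float) -> float:
    """Convert a per-process CPU percentage into fully busy cores' worth of work."""
    return cpu_percent / 100.0


print(f"400% CPU is about {cores_worth(400.0):.0f} cores' worth of work")
print(f"Logical cores on this machine: {os.cpu_count()}")
```

The M2 has 8 CPU cores (4 performance and 4 efficiency), so 400% would be consistent with the training loop mostly keeping four of them busy, though the work may be spread unevenly across more than four.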

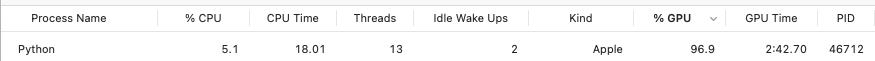

Here are the Activity Monitor and training results using Apple's GPU via MPS:

This goes to show how well PyTorch is written: with basically no effort, you can make good use of your MacBook Air's resources after writing just the “logic” of the network for training. The speedup on the MacBook Air's M2 GPU was very impressive, even if VGG16 on CIFAR-10 is more academic in nature. I'm not sure how to see how many of the GPU cores are being used.

It's doubtful you’d ever use these in production environments, but they're fun and useful when tinkering with neural nets on your laptop without having to be on the cloud.

I haven’t tested this for inference yet, but the unified nature of Apple's memory means you don’t have to worry about transferring data between CPU and GPU, and latency and memory utilization are probably much better as well. Apple letting you configure up to 512 GB of unified memory on its highest-end M-series chips probably makes them uniquely good at inference compared with other laptops or off-the-shelf computers. Obviously you're not gonna beat NVIDIA clusters, but for consumer products Apple kinda fumbled into a success here. Building a cluster out of some M-chip Mac minis loaded up with RAM would be a fun project to see how far you could push them. At 600 bucks, the new Mac mini is a remarkable deal for running a simple home server for fun.
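To get a feel for why memory capacity matters for inference, here is a back-of-the-envelope sketch of how much memory a model's weights alone would need. The model sizes are hypothetical examples, `weights_gb` is a made-up helper, and the estimate ignores activations, caches, and whatever the OS itself is using.

```python
def weights_gb(n_params: int, bytes_per_param: int) -> float:
    """Memory needed for the weights alone, in gigabytes (1 GB = 1e9 bytes)."""
    return n_params * bytes_per_param / 1e9


# Hypothetical model sizes, for illustration only.
for name, n_params in (("7B", 7_000_000_000), ("70B", 70_000_000_000)):
    for dtype, nbytes in (("float16", 2), ("float32", 4)):
        print(f"{name} params in {dtype}: {weights_gb(n_params, nbytes):.0f} GB")
```

By this rough measure, a 70B-parameter model in float16 needs about 140 GB for weights, which is more than most consumer GPUs offer but within reach of a machine with a large pool of unified memory.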